Jeff Davis has nearly 20 years of experience in software development. Way back when, he started as a self-taught Oracle DBA (his Master's degree is in Security Policy Studies, as in Political Science), and for the past 5 years or so he has focused heavily on web services enablement, enterprise integration, and open-source enterprise solutions.

Monday, December 3, 2007

New interesting book on WS-BPEL

The first section of the book, which I found particularly useful given my lack of PHP experience, primarily focuses on how to consume and build SOAP web services with PHP. Using PHP with SOAP doesn't seem as straightforward as it should be, but Yuli does a good job of describing the process and best practices. The remaining sections cover BPEL and ActiveBPEL's implementation of it.

The book is fairly brief in size (280 pages), but covers a lot of material in an effective fashion. I would recommend it to anyone interested in using PHP with SOAP and/or ActiveBPEL (the BPEL portions are beneficial even if you are not a PHP developer).

jeff

Saturday, December 1, 2007

SOA Essentials, Part 1

For instance, let’s assume Acme Company, as a disciple of SOA, has created a multitude of such services, including CRM functionality such as creating contacts, inquiries/cases, email correspondence, action items and the like. Now, in an effort to improve and streamline lead generation, they want to develop a new web-based form so that anyone interested in their products or services can easily place an inquiry. The previous form would only send the inquiry details to an email distribution list that was poorly monitored and tracked. Further, the prospect's information would have to be re-keyed into the CRM system manually, which was very tedious (what I call “swivel-chair integration”). With the new inquiry form, they want to use their SOA services to automatically perform the following business process:

- Create a new Contact in their CRM system.

- Create a new CRM “Case” using the data provided within the form and assign that Case to an appropriate sales team member (perhaps based on geographic business rules).

- Create a new “Activity” for the sales team member, which is analogous to a to-do item to follow-up on the inquiry.

- Automatically generate a response email to the prospect with their Case # and sales team member.

- Create an entry in their internal Training Management System (TMS) so that the prospect is automatically notified of any upcoming webinars or seminars.

- Create an event in their Business Activity Monitoring (BAM) system, which is carefully monitored by company executives as part of their Key Performance Indicators (KPI).

Since Acme has developed a repository of reusable services, they can now respond quickly to market forces by creating new applications and processes in a fraction of the time that would be required in the absence of an SOA.
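
To make the inquiry process above a bit more concrete, here is a minimal Java sketch of how the six steps might be stitched together from existing services. Everything in it is an assumption for illustration: the CrmService and OutreachService interfaces, their method names, and the toy region rule are invented, and the services are replaced by in-memory fakes that only record which call was made.

```java
import java.util.ArrayList;
import java.util.List;

// Illustrative only: these interfaces and method names are assumptions, not a real CRM API.
class InquiryProcessSketch {

    /** Stand-in for Acme's reusable CRM services. */
    interface CrmService {
        String createContact(String name, String email);
        String createCase(String contactId, String details, String assignee);
        void createActivity(String caseNumber, String assignee);
    }

    /** Stand-in for the email, TMS and BAM services. */
    interface OutreachService {
        void emailProspect(String email, String caseNumber, String assignee);
        void registerForTraining(String contactId);
        void recordBamEvent(String eventType, String caseNumber);
    }

    private final CrmService crm;
    private final OutreachService outreach;

    InquiryProcessSketch(CrmService crm, OutreachService outreach) {
        this.crm = crm;
        this.outreach = outreach;
    }

    /** Runs the six steps of the inquiry process in order and returns the case number. */
    String handleInquiry(String name, String email, String details, String region) {
        String contactId = crm.createContact(name, email);
        String assignee = assignByRegion(region);
        String caseNumber = crm.createCase(contactId, details, assignee);
        crm.createActivity(caseNumber, assignee);
        outreach.emailProspect(email, caseNumber, assignee);
        outreach.registerForTraining(contactId);
        outreach.recordBamEvent("NEW_INQUIRY", caseNumber);
        return caseNumber;
    }

    /** Toy geographic rule; a real one would likely live in a rules service. */
    static String assignByRegion(String region) {
        return "EMEA".equals(region) ? "sales-emea" : "sales-americas";
    }

    /** Wires the process to in-memory fakes that only record which service was called. */
    static List<String> demo() {
        final List<String> log = new ArrayList<>();
        CrmService crm = new CrmService() {
            public String createContact(String name, String email) { log.add("createContact"); return "C-1"; }
            public String createCase(String contactId, String details, String assignee) { log.add("createCase"); return "CASE-100"; }
            public void createActivity(String caseNumber, String assignee) { log.add("createActivity"); }
        };
        OutreachService outreach = new OutreachService() {
            public void emailProspect(String email, String caseNumber, String assignee) { log.add("emailProspect"); }
            public void registerForTraining(String contactId) { log.add("registerForTraining"); }
            public void recordBamEvent(String eventType, String caseNumber) { log.add("recordBamEvent"); }
        };
        String caseNumber = new InquiryProcessSketch(crm, outreach)
                .handleInquiry("Pat", "pat@example.com", "Pricing question", "EMEA");
        log.add(caseNumber);
        return log;
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```

The point of the sketch is that the new process contains no CRM, email or BAM logic of its own; it only sequences calls to services that already exist.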

SOA, being the latest in a string of IT “silver bullets”, has been subject to a lot of misuse and bastardization. In part, this is due to the eagerness of vendors to associate their products with this new architectural style. Still, many of the common definitions of SOA do share important characteristics:

“Contemporary SOA represents an open, agile extensible, federated, composable architecture comprised of autonomous, QoS-capable, vendor diverse, interoperable, discoverable, and potentially reusable services, implemented as Web services” (Erl, Service Oriented Architecture, 2005, pg 54).

“Service-Oriented Architecture is an IT strategy that organizes the discrete functions contained in enterprise applications into interoperable, standards-based services that can be combined and reused quickly to meet business needs.” (Domain Model for SOA: Realizing the Business Benefit of Service-Oriented Architecture. BEA White Paper. 2005)

As you can see, the common theme is the notion of discrete, reusable business services that can be used to construct new and novel business processes or applications. The history of IT is replete, however, with component-based frameworks that attempted similar objectives. They include the Common Object Request Broker Architecture (CORBA), along with Microsoft’s Distributed Component Object Model (DCOM) and Java’s Enterprise JavaBeans (EJB). What distinguishes these approaches from the newer SOA? There are several differences:

- CORBA, EJB and DCOM are all based on remote procedure call (RPC) technologies. This created tight dependencies between the client application and the underlying RPC components. The RPC components were, by nature, very narrow in scope and usually tailored specifically for use by a single application, thus limiting reusability. Also, RPC solutions tended to generate significant network traffic, and suffered from complications in transaction management (e.g., two-phase commits).

- EJB and DCOM are both tied to specific platforms and were thus not interoperable. Unless a homogeneous environment existed (rare in today’s enterprises, which have often grown through acquisition), their benefits could not be easily achieved. SOA-based web services were designed with interoperability in mind.

- Complexity. CORBA, EJB, and to a lesser degree DCOM, were complicated technologies that often required commercial products to implement. SOA can be introduced using a multitude of off-the-shelf, open-source technologies.

- SOA relies upon XML as the underlying data representation, unlike the others which used proprietary, binary-based objects. XML’s popularity is undeniable, in part because it is easy to understand and generate.

Another distinction between an SOA and earlier component-based technologies is that an SOA is more than technology per se, but also constitutes a set of principles or guidelines. This includes notions such as governance, service-level agreements; meta-data definitions, and registries. These topics will be addressed in greater detail in the sections that follow.

So what does an SOA resemble conceptually? Figure 1.1 depicts the interplay between the backend systems, exposed services, and orchestrated business processes.

As you can see, service components represent the layer atop the enterprise business systems/applications. These components allow the layers above to interact with these systems. The composite services layer represents more coarse-grained services that are composed of two or more individual components. For example, a “createPO” composite service may integrate finer-grained services such as “createCustomer”, “createPOHeader”, and “createPOLineItems”. The composite services, in turn, can then be called by higher-level orchestrations, such as one for processing orders placed through a web site.
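
As a rough illustration of the composite layer, the Java sketch below builds a “createPO” composite out of the three finer-grained services named above. The interfaces, signatures and return values are assumptions made up for this example; in a real SOA each would be a remote service call rather than a lambda.

```java
import java.util.Arrays;
import java.util.List;

// Illustrative only: interface names and signatures are assumptions for this sketch.
class CreatePoCompositeSketch {

    // Fine-grained service components sitting atop the backend systems
    interface CustomerService { String createCustomer(String name); }
    interface PoHeaderService { String createPOHeader(String customerId); }
    interface PoLineService { int createPOLineItems(String poId, List<String> skus); }

    private final CustomerService customers;
    private final PoHeaderService headers;
    private final PoLineService lineItems;

    CreatePoCompositeSketch(CustomerService customers, PoHeaderService headers, PoLineService lineItems) {
        this.customers = customers;
        this.headers = headers;
        this.lineItems = lineItems;
    }

    /** Coarse-grained composite: one call for the consumer, three calls underneath. */
    String createPO(String customerName, List<String> skus) {
        String customerId = customers.createCustomer(customerName);
        String poId = headers.createPOHeader(customerId);
        int lines = lineItems.createPOLineItems(poId, skus);
        return poId + " with " + lines + " line items";
    }

    /** Wires the composite to trivial stand-ins for the three services. */
    static String demo() {
        CreatePoCompositeSketch composite = new CreatePoCompositeSketch(
                name -> "CUST-" + name,
                customerId -> "PO-" + customerId,
                (poId, skus) -> skus.size());
        return composite.createPO("ACME", Arrays.asList("widget", "gadget"));
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```

An orchestration for web orders would then call createPO as a single step, without knowing that three services sit behind it.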

1.1 BENEFITS OF SOA

Although we’ve already touched on a few of the inherent benefits of an SOA, we can summarize the major benefits as:

- Business Agility. Business functions, exposed as services, can be stitched together to create new applications using orchestration and workflow products. This allows for rapid creation of new business processes and the ability to quickly alter existing ones. Today’s fast-moving business environment demands such agility in order for companies to remain competitive.

- Code Reuse. The ability to effectively reuse existing functionality constitutes a cost savings. Perhaps equally significant is the impact on quality. Reusing proven and well-tested assets inevitably improves quality.

- Platform Agnostic. Services exposed in a standards-based fashion can bridge between differing platforms and languages. For example, a “getCustomer” service written in Java can now be accessed by a .NET client. The popularity of new interpreted scripting languages such as Ruby and Groovy further intensifies the relevance of having agnostic-based services. This also reduces vendor lock-in and switching costs, and encourages true “best-of-breed” adoption.

- Legacy Enablement. The investment in legacy applications can be preserved by exposing their business rules and functions through service wrappers. This not only saves money, but opens these systems up for more creative purposes. Thus, integration costs are reduced.

- Standardized XML Vocabularies. The XML-centric nature of SOA encourages the development of a common set of well-defined business object documents. By creating a common vocabulary within your enterprise, integration becomes vastly simplified.

Fundamentally, an SOA is about exposing services that facilitate the sharing of information across application and business boundaries. In our example earlier describing Acme Corporation’s new web inquiry form (figure 1), we demonstrated a business process that spanned across several enterprise systems. This is a common requirement and enables the rapid creation or modification of business processes. This translates into faster turnaround time for the introduction of new products; cost savings derived from greater productivity and efficiency; and faster response to changes in market conditions.

The benefits of moving to an SOA are real, but how can they be realized? The next article will cover what elements are necessary to succeed with an SOA.

Wednesday, August 15, 2007

Using Embedded ServiceMix Client

In this article, I'm going to provide an example of calling the ServiceMix File and XSLT components. The SM file component example is InOnly (i.e., fire-and-forget), whereas the XSLT example is synchronous (block-and-wait for response).

We'll first look at the setup.xml file, which sets up the component definitions and ServiceMix JBI container.

setup.xml

    <beans xmlns:sm="http://servicemix.apache.org/config/1.0"
           xmlns:foo="http://servicemix.org/cheese"
           xmlns:eip="http://servicemix.apache.org/eip/1.0">

      <!-- the JBI container -->
      <sm:container id="jbi" embedded="true">
        <sm:activationSpecs>

          <!-- returns dummy xml for testing -->
          <sm:activationSpec componentName="test" service="foo:test">
            <sm:component>
              <bean class="org.apache.servicemix.components.xslt.XsltComponent">
                <property name="xsltResource" value="classpath:resources/test.xslt"/>
              </bean>
            </sm:component>
          </sm:activationSpec>

          <sm:activationSpec componentName="receiver" service="foo:receiver">
            <sm:component>
              <bean class="org.apache.servicemix.tck.ReceiverComponent" />
            </sm:component>
          </sm:activationSpec>

          <sm:activationSpec componentName="fileSender" service="foo:fileSender">
            <sm:component>
              <bean class="org.apache.servicemix.components.file.FileWriter">
                <property name="directory" value="/tmp/test" />
                <property name="marshaler">
                  <bean class="org.apache.servicemix.components.util.DefaultFileMarshaler">
                    <property name="fileName">
                      <bean class="org.apache.servicemix.expression.JaxenStringXPathExpression">
                        <constructor-arg value="'test.xml'" />
                      </bean>
                    </property>
                  </bean>
                </property>
              </bean>
            </sm:component>
          </sm:activationSpec>

        </sm:activationSpecs>
      </sm:container>

      <bean id="client" class="org.apache.servicemix.client.DefaultServiceMixClient">
        <constructor-arg ref="jbi" />
      </bean>

    </beans>

In the above, you can see that I've set up the two components used in this example: the XSLT service named "test" and the file writer component named "fileSender". In the case of the XSLT component, the test.xslt file associated with it simply returns the following XML:

    <hello>
      <text>Hi!</text>
    </hello>

In the case of the fileSender, it is configured to write a file to /tmp/test called "test.xml" with the contents of what was passed when it was invoked.

Next, we'll look at a jUnit test that illustrates how a ServiceMix instance can be instantiated and run through a Java client.

ServiceMixClientTest.java

    package company.cop.check.test;

    import javax.xml.namespace.QName;

    import junit.framework.TestCase;

    import org.apache.commons.logging.Log;
    import org.apache.commons.logging.LogFactory;
    import org.apache.servicemix.client.ServiceMixClient;
    import org.apache.servicemix.jbi.container.SpringJBIContainer;
    import org.apache.servicemix.jbi.jaxp.SourceTransformer;
    import org.apache.servicemix.jbi.resolver.EndpointResolver;
    import org.apache.servicemix.tck.Receiver;
    import org.apache.xbean.spring.context.ClassPathXmlApplicationContext;
    import org.springframework.context.support.AbstractXmlApplicationContext;
    import org.w3c.dom.Document;

    /**
     * @version $Revision: 1.2 $
     */
    public class ServiceMixClientTest extends TestCase {

        private static final transient Log LOG = LogFactory.getLog(ServiceMixClientTest.class);

        protected AbstractXmlApplicationContext context;
        protected ServiceMixClient client;
        protected Receiver receiver;
        protected SourceTransformer transformer = new SourceTransformer();

        // InOnly (fire-and-forget): the message is handed to the file writer, no reply expected
        public void testCallingComponent() throws Exception {
            QName service = new QName("http://servicemix.org/cheese", "fileSender");
            EndpointResolver resolver = client.createResolverForService(service);
            client.send(resolver, null, null, "world ");
        }

        // Request-response: blocks until the XSLT component returns the transformed document
        public void testXslt() throws Exception {
            QName service = new QName("http://servicemix.org/cheese", "test");
            EndpointResolver resolver = client.createResolverForService(service);
            Document response = (Document) client.request(resolver, null, null, "world ");
            assertEquals("Hi!", response.getChildNodes().item(0).getTextContent());
            System.out.println("Response: " + response.getChildNodes().item(0).getTextContent());
        }

        // Loads setup.xml, which starts the embedded JBI container and creates the client
        protected void setUp() throws Exception {
            context = createBeanFactory();
            client = getClient();
            SpringJBIContainer jbi = (SpringJBIContainer) getBean("jbi");
        }

        protected ServiceMixClient getClient() throws Exception {
            return (ServiceMixClient) getBean("client");
        }

        // Closing the Spring context shuts the embedded container down again
        protected void tearDown() throws Exception {
            super.tearDown();
            if (context != null) {
                context.close();
            }
        }

        protected Object getBean(String name) {
            Object answer = context.getBean(name);
            assertNotNull("Could not find object in Spring for key: " + name, answer);
            return answer;
        }

        protected AbstractXmlApplicationContext createBeanFactory() {
            return new ClassPathXmlApplicationContext("resources/setup.xml");
        }
    }

The setUp() method, called automatically by jUnit prior to each test, establishes the JBI ServiceMix container/server. Then, each of the test methods is called. The testCallingComponent() method sends a message to the "fileSender" ServiceMix component/service. It identifies the target service by QName, which combines the namespace and the name given to the service in the setup.xml file. The client.send() method sends a message using the provided parameters; notice that the last of these is the XML packet. When run, this should result in a file called "test.xml" being written to /tmp/test. The contents should be whatever was passed in the client.send() operation.

The testXslt() method is used to invoke the test XSLT service defined in setup.xml. Unlike the fileSender call, this is a synchronous call, as we anticipate the transformed XML being sent back to us. To do so, the client.request() method is used, and it will return the transformed XML in the form of a DOM Document. That Document object is then traversed to print out the text contents of the "text" node. This should be "Hi!", as defined in the XSLT document.
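
One thing to watch when traversing the response: calling getTextContent() on the root element returns the text of all of its descendants, including any whitespace between the tags. Below is a small standalone sketch, using only the JDK's DOM classes, that looks up the "text" element by name instead. The class and method names are mine, and it parses a literal string rather than a real ServiceMix response.

```java
import java.io.StringReader;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.xml.sax.InputSource;

// Illustrative only: parses a literal string standing in for the XSLT component's reply.
class HelloResponseSketch {

    /** Returns the content of the first "text" element, ignoring surrounding whitespace nodes. */
    static String extractText(String xml) {
        try {
            Document doc = DocumentBuilderFactory.newInstance()
                    .newDocumentBuilder()
                    .parse(new InputSource(new StringReader(xml)));
            return doc.getElementsByTagName("text").item(0).getTextContent();
        } catch (Exception e) {
            throw new IllegalArgumentException("Could not parse response XML", e);
        }
    }

    /** Returns the text content of the root element, which includes whitespace between tags. */
    static String rootText(String xml) {
        try {
            Document doc = DocumentBuilderFactory.newInstance()
                    .newDocumentBuilder()
                    .parse(new InputSource(new StringReader(xml)));
            return doc.getDocumentElement().getTextContent();
        } catch (Exception e) {
            throw new IllegalArgumentException("Could not parse response XML", e);
        }
    }

    public static void main(String[] args) {
        String xml = "<hello>\n  <text>Hi!</text>\n</hello>";
        System.out.println("[" + extractText(xml) + "]");
        System.out.println("[" + rootText(xml) + "]");
    }
}
```

If the stylesheet output is pretty-printed, the second form carries the extra line breaks along, which matters when comparing against an exact string in an assertion.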

Final Thoughts

As you can see, it's pretty straightforward to invoke ServiceMix within a standalone Java client, without requiring ServiceMix to be running as a stand-alone instance. Doing this, however, does impose some performance penalties, as instantiating the JBI container does consume some overhead.

We'll explore calling remote services from a running ServiceMix instance in another article. Another intriguing option would be to use a stateful EJB to maintain a ServiceMix context, but there could be some tricky concurrency issues with that approach.


Monday, August 13, 2007

SOA Design Principles

Service Loose Coupling

Coupling refers to a connection or relationship between two things. A measure of coupling is thus comparable to a level of dependency. In SOA, the objective is to reduce ("loosen") dependencies between the service contract, its implementation, and its service consumers. This makes a service more reusable and flexible. A well-defined meta-data contract, such as that provided through a web service WSDL, defines the interface to the service.
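
In plain Java terms, the same idea looks roughly like the sketch below, where an interface plays the role of the contract and the consumer never sees which implementation it is talking to. This is only an analogy under my own invented names (CustomerLookup and friends); a WSDL contract additionally decouples platform and transport, which a Java interface does not.

```java
// Illustrative only: an in-process analogy for a service contract, with invented names.
class LooseCouplingSketch {

    /** The contract: the only thing a consumer is allowed to depend on. */
    interface CustomerLookup {
        String getCustomerName(int customerId);
    }

    /** One implementation behind the contract. */
    static class InMemoryLookup implements CustomerLookup {
        public String getCustomerName(int customerId) {
            return "Customer #" + customerId;
        }
    }

    /** A different implementation, wrapping a pretend legacy record format. */
    static class LegacyWrapperLookup implements CustomerLookup {
        public String getCustomerName(int customerId) {
            String legacyRecord = "CUSTOMER #" + customerId + "   "; // padded, upper-case record
            String trimmed = legacyRecord.trim();
            return "Customer" + trimmed.substring("CUSTOMER".length());
        }
    }

    /** The consumer: works unchanged with either implementation. */
    static String greet(CustomerLookup lookup, int customerId) {
        return "Hello, " + lookup.getCustomerName(customerId);
    }

    public static void main(String[] args) {
        System.out.println(greet(new InMemoryLookup(), 7));
        System.out.println(greet(new LegacyWrapperLookup(), 7));
    }
}
```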

Service Abstraction

Sometimes known as "data hiding", this principle emphasizes the need to hide as much as possible of the underlying details of a service. This works in conjunction with Service Loose Coupling to help make services more reusable. It also permits changes to be made to the service without unduly impacting clients. In essence, the service should be a "black box" that performs its operations without the client needing any understanding of the mechanics behind it.

Service Reusability

A service should not be specific to a single functional context. Instead, it should be "agnostic", and support numerous usage scenarios, without having functional dependencies. One challenge with service reusability is that it often promotes more granular-type services, because a service with narrower focus is often more easily reused. This can have performance implications and must be weighed carefully.

Service Autonomy

A service should exercise a high level of control over its underlying runtime environment. In other words, it should be managed within its own environment, and be able to support a high degree of reliability and performance. The client should have no visibility into, or concern over, the environment in which the service is run.

Service Statelessness

Services should minimize resource consumption by deferring the management of state information, when necessary. A service that maintains state also likely reduces reusability, as it then is likely imposing a certain process or functional dependency on the service.
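
A common way to defer state to the consumer is to have the caller pass whatever context the service needs on every call. The sketch below shows this with a paged lookup, where the offset travels with each request instead of being remembered as a per-client cursor. The data and method names are made up for the example.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Illustrative only: a stateless paged lookup over hard-coded sample data.
class StatelessPagingSketch {

    private static final List<String> CUSTOMERS = Arrays.asList("Ann", "Bob", "Cid", "Dee", "Eve");

    /**
     * Returns up to 'limit' customers starting at 'offset'.
     * The service keeps no cursor between calls, so any instance can answer any request.
     */
    static List<String> getCustomers(int offset, int limit) {
        int from = Math.min(Math.max(offset, 0), CUSTOMERS.size());
        int to = Math.min(from + Math.max(limit, 0), CUSTOMERS.size());
        return new ArrayList<>(CUSTOMERS.subList(from, to));
    }

    public static void main(String[] args) {
        System.out.println(getCustomers(0, 2));
        System.out.println(getCustomers(2, 2));
        System.out.println(getCustomers(4, 10));
    }
}
```

Because nothing is remembered between calls, the same operation can be reused by a paging UI, a batch export, or an orchestration without imposing a particular call sequence on any of them.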

Service Discoverability

Services should be supplemented with communication meta-data by which they can be effectively discovered and interpreted. In other words, services should be defined within a common registry that enables users to readily understand their purpose and requirements. This need becomes more pronounced as an organization grows and spawns multiple development groups or organizations.

Service Composability

Services should be effective composition participants, regardless of the size and complexity of the composition. For instance, this assumes that it will include comprehensive exception handling features and support multiple run-time scenarios. It also requires that the service be capable of handling multiple, concurrent transactions and be highly performing. Using a consistent invocation pattern is also instrumental in this.


Friday, August 3, 2007

What's wrong with BPEL?

* BPEL, in an effort to support all vendors/platforms, only works with SOAP-based services. This means that every time an invocation is required, say to call an FTP or email adapter, the adapter/service must be exposed as a web service. That imposes a lot of extra overhead on the developer, and for internal services, seems onerous. Most of us don't live in a world where everything is automatically exposed as web services (I'm a big proponent of SOAP/Web services, but also realistic).

* Passing of data between activities/steps within a BPEL orchestration must be done using XML and WSDL constructs. Complex data transformations and assignments must frequently be performed using XSL, and developers who aren't well versed in the nuances of XSD schemas (in particular, namespaces) can become frustrated. Java and .NET developers must often wonder why they can't just pass data class objects between the nodes.

* BPEL, by its definition, is a closed standard. If a product is 100% BPEL compliant, extending it by supporting direct Java API calls, for instance, automatically makes your orchestrations non-compliant, thus losing the benefits of the vendor neutrality and compatibility BPEL promises. Then what's the point of using BPEL?

* BPEL-based products are often app-server centric, meaning they have to be deployed and hosted. This often poses challenges from a testing standpoint, as developers must deploy their orchestration before being able to test it. Again, this impacts developer productivity in a negative way.

* BPEL introduces nomenclature that is often very confusing and must be learned. Concepts like "Partner Links", "Partner Link Types", "Partner Roles", "Port Types" and "Correlation Sets" are often not intuitive, and require a deep understanding of all things WSDL.

* Some products, such as Intalio, recognize the difficulties in modeling things natively in BPEL, so they use alternative, presumably more intuitive, solutions such as BPMN. While that addresses some of the developer productivity concerns mentioned, they then rely on translation/code generation to output BPEL code. That "black-box" translation step seems like a source of ongoing problems (the developer must, for example, try to determine why a diagram in BPMN got translated into such-and-such BPEL code). Seems like a headache waiting to happen.


Thursday, May 17, 2007

Jitterbit Screencast Demonstration

I created a little flash screencast that demonstrates how Jitterbit can be used in tandem with Adempiere, an open-source ERP application (it's a fork of Compiere). It illustrates how to run a database query from an Adempiere table, transform it into a CSV delimited text file, and then publish it to an FTP site. Hope you enjoy it!

Friday, May 4, 2007

Adempiere Integration Framework: Part 1

External services can be any system that Adempiere needs to communicate with. For example, integrating with a shipper or credit card processor comes to mind. Or, synchronizing data with popular CRM systems such as Salesforce.com or SugarCRM. While people have been doing this sort of thing with Compiere/Adempiere for a long time, more often than not, it's a point-to-point integration. The disadvantage to this approach is that it can quickly become unmanageable, and tightly couples the integration code into Adempiere. This limits flexibility, and can be fragile and difficult to maintain.

Enterprise Service Buses (ESBs) have grown in popularity because of the recognized limitations and issues surrounding point-to-point integrations. In addition, ESBs generally provide a bevy of reusable adapters, such as SOAP, HTTP, email and FTP, that eliminate the need to recode these solutions over and over again. Often, they also have sophisticated business process orchestration features, such as BPEL, built in to help design and manage complex workflows. Popular open-source ESBs include Mule, Apache ServiceMix, OpenESB, PetalsESB and Celtix.

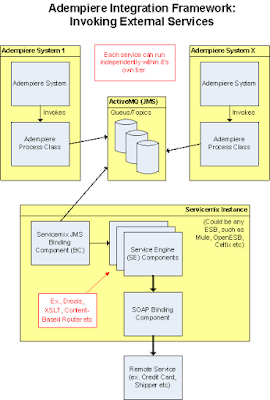

For today's topic, I want to discuss a framework that can be used to invoke external services from within Adempiere using a Process. The Process, in turn, publishes the outbound request to a JMS queue or topic. From there, it is picked up for processing by an ESB. The ESB, using its rich set of pre-built adapters, transforms the request to the destination format and submits it for processing. This process can be asynchronous or synchronous, depending upon the client's requirements.

Why not just invoke the ESB directly from within the Adempiere Process? Again, it's about keeping things loosely coupled. We might want to change ESBs at some future point, and hardwiring specific ESB code (most likely proprietary in nature, using its APIs) into the Adempiere Process callout limits such flexibility.

Using JMS, however, we can define a standard XML schema for the data transfer. As long as the request/response adheres to the proper format, the Adempiere Process doesn't need to care about the implementation details.
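
To give a feel for what such a standard request might look like, here is a small sketch that builds an XML payload with the JDK's DOM and Transformer classes. The element and attribute names (serviceRequest, param, service, name) are placeholders I made up, not an agreed schema. In the Adempiere Process, the resulting string would become the body of a JMS text message, which I've left out so the example runs without a JMS provider.

```java
import java.io.StringWriter;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.transform.OutputKeys;
import javax.xml.transform.Transformer;
import javax.xml.transform.TransformerFactory;
import javax.xml.transform.dom.DOMSource;
import javax.xml.transform.stream.StreamResult;
import org.w3c.dom.Document;
import org.w3c.dom.Element;

// Illustrative only: the request vocabulary below is hypothetical.
class ServiceRequestSketch {

    /** Builds a one-parameter request document and serializes it to a string. */
    static String buildRequest(String service, String paramName, String paramValue) {
        try {
            Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder().newDocument();
            Element root = doc.createElement("serviceRequest");
            root.setAttribute("service", service);
            doc.appendChild(root);

            Element param = doc.createElement("param");
            param.setAttribute("name", paramName);
            param.setTextContent(paramValue); // special characters are escaped on serialization
            root.appendChild(param);

            Transformer serializer = TransformerFactory.newInstance().newTransformer();
            serializer.setOutputProperty(OutputKeys.OMIT_XML_DECLARATION, "yes");
            StringWriter out = new StringWriter();
            serializer.transform(new DOMSource(doc), new StreamResult(out));
            return out.toString();
        } catch (Exception e) {
            throw new IllegalStateException("Could not build request XML", e);
        }
    }

    public static void main(String[] args) {
        // This string is what the Process would place on the queue or topic
        System.out.println(buildRequest("shipping.rateQuote", "orderId", "1000123"));
    }
}
```

Since the ESB only ever sees this document, swapping one ESB for another means re-pointing the JMS consumer, not touching the Process.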

Below is a diagram of what I'm describing:

As you can see, in this diagram, I'm using the ServiceMix ESB (which I do have a preference for, given its JBI compliance, maturity, breadth, and Spring-based architecture). A ServiceMix JMS component (in JBI parlance, that's a "Binding Component") picks up the JMS message sent by the Adempiere Process, then performs some transformation and business rule processing using pre-built components designed for that purpose ("Service Engines", in JBI). Then, when the XML is in the appropriate format/schema, a SOAP adapter is used to call the external web service that's providing the service desired.

This architecture is highly flexible, and allows for a wide variety of implementations. For example, the JMS and ServiceMix instances can be running in a distributed fashion on one or more servers (both ActiveMQ and ServiceMix provide clustering capabilities as well).

In my next article, I'll describe how an ESB can be used to receive inbound requests generated outside of Adempiere, for example, a sales order that is received from an external channel such as an ecommerce web site.

As always, I welcome your thoughts/comments.

jeff


Tuesday, April 17, 2007

Using Adempiere's Data Dictionary

As part of the Web Services initiative I'm working on, it's necessary to dynamically generate the XML schemas for those objects that are required to be exposed as web services. This can be done easily by using the org.compiere.model.MTable and org.compiere.model.MColumn classes. The combination of these provides you with the properties of a particular Adempiere/Compiere object, including such relevant information as column name, column type, column length, default values, and reference information.

Let's take a specific example -- the C_Invoice object -- and determine its columns and associated properties. Here's some Java pseudo-code that illustrates how this can be done:

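    import org.compiere.model.MColumn;
    import org.compiere.model.MTable;
    import org.compiere.util.Env;

    // Look up the table definition in the data dictionary
    MTable mTable = MTable.get(Env.getCtx(), "C_Invoice");
    // true = requery the column definitions
    MColumn[] mcolumn = mTable.getColumns(true);
    for (int i = 0; i < mcolumn.length; i++) {
        System.out.println("Column is: " + mcolumn[i].getName());
        System.out.println(" Desc is: " + mcolumn[i].getDescription());
        System.out.println(" Length is: " + mcolumn[i].getFieldLength());
    }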

As you can see, it's very easy to interrogate what fields and properties belong to a given object, and it's completely dynamic, driven by the current configuration specified in the data dictionary.

For the web services initiative, I'm using this data to dynamically generate the WSDL schema.
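
As a rough idea of how column metadata could drive schema generation, here is a sketch that turns a list of column descriptions into an XSD complex type. To keep it runnable without Adempiere on the classpath, it uses a hypothetical ColumnMeta holder in place of MColumn, and the mapping to XSD types is assumed to have been done already; the real generator would need to derive it from the dictionary's reference types.

```java
import java.util.Arrays;
import java.util.List;

// Illustrative only: ColumnMeta is a hypothetical stand-in for data read from MColumn.
class SchemaFromDictionarySketch {

    static final class ColumnMeta {
        final String name;
        final String xsdType;   // assumed to be mapped from the dictionary type beforehand
        final int length;       // 0 means "no length restriction"
        final boolean mandatory;

        ColumnMeta(String name, String xsdType, int length, boolean mandatory) {
            this.name = name;
            this.xsdType = xsdType;
            this.length = length;
            this.mandatory = mandatory;
        }
    }

    /** Emits one xs:element; strings with a known length get a maxLength restriction. */
    static String toXsdElement(ColumnMeta c) {
        String occurs = c.mandatory ? "" : " minOccurs=\"0\"";
        if ("xs:string".equals(c.xsdType) && c.length > 0) {
            return "<xs:element name=\"" + c.name + "\"" + occurs + ">"
                    + "<xs:simpleType><xs:restriction base=\"xs:string\">"
                    + "<xs:maxLength value=\"" + c.length + "\"/>"
                    + "</xs:restriction></xs:simpleType></xs:element>";
        }
        return "<xs:element name=\"" + c.name + "\" type=\"" + c.xsdType + "\"" + occurs + "/>";
    }

    /** Wraps the generated elements in a named complex type. */
    static String toComplexType(String typeName, List<ColumnMeta> columns) {
        StringBuilder sb = new StringBuilder();
        sb.append("<xs:complexType name=\"").append(typeName).append("\"><xs:sequence>");
        for (ColumnMeta c : columns) {
            sb.append(toXsdElement(c));
        }
        sb.append("</xs:sequence></xs:complexType>");
        return sb.toString();
    }

    public static void main(String[] args) {
        List<ColumnMeta> columns = Arrays.asList(
                new ColumnMeta("DocumentNo", "xs:string", 30, true),
                new ColumnMeta("DateAcct", "xs:date", 0, true),
                new ColumnMeta("IsPrinted", "xs:boolean", 0, false));
        System.out.println(toComplexType("C_Invoice", columns));
    }
}
```

Because the schema is derived at runtime, a column added through the data dictionary would show up in the generated type without any code change.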

jeff


Thursday, April 12, 2007

Adempiere (Compiere) Web Services

    <idal:mField idal:name="DatePrinted">
      <idal:newvalue/>
    </idal:mField>
    <idal:mField idal:name="Processed">
      <idal:newvalue>true</idal:newvalue>
    </idal:mField>
    <idal:mField idal:name="DateAcct">
      <idal:newvalue>11/01/2003</idal:newvalue>
    </idal:mField>
    <idal:mField idal:name="IsPrinted">
      <idal:newvalue>false</idal:newvalue>
    </idal:mField>
    <idal:mField idal:name="IsInvoiced">
      <idal:newvalue>true</idal:newvalue>
    </idal:mField>

This is really not ideal, as it's very verbose, and it circumvents the power of XML, which is its self-describing vocabulary.

A more preferred approach to the above is something like:

    <DatePrinted/>
    <Processed>true</Processed>
    <DateAcct>11/01/2003</DateAcct>
    <IsPrinted>false</IsPrinted>
    <IsInvoiced>true</IsInvoiced>

Obviously, in addition to being more succinct, it's also more human readable, which is an important consideration.
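
The mapping between the two styles is mechanical, as the sketch below tries to show: each mField becomes an element named after its idal:name attribute, with the newvalue text as its content. It uses only the JDK's DOM parser. The wrapping idal:fields element and the urn:example:idal namespace URI are assumptions I added so the fragment parses as a document; they are not part of the original format.

```java
import java.io.StringReader;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;
import org.xml.sax.InputSource;

// Illustrative only: converts the verbose mField style into one element per field.
class FieldSimplifierSketch {

    static String simplify(String verboseXml) {
        try {
            // The default factory is not namespace-aware, so "idal:mField" is matched as a plain name
            Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder()
                    .parse(new InputSource(new StringReader(verboseXml)));
            NodeList fields = doc.getElementsByTagName("idal:mField");
            StringBuilder out = new StringBuilder();
            for (int i = 0; i < fields.getLength(); i++) {
                Element field = (Element) fields.item(i);
                String name = field.getAttribute("idal:name");
                NodeList values = field.getElementsByTagName("idal:newvalue");
                String value = values.getLength() == 0 ? "" : values.item(0).getTextContent();
                if (value.isEmpty()) {
                    out.append('<').append(name).append("/>");
                } else {
                    out.append('<').append(name).append('>')
                       .append(escape(value))
                       .append("</").append(name).append('>');
                }
            }
            return out.toString();
        } catch (Exception e) {
            throw new IllegalArgumentException("Could not parse field XML", e);
        }
    }

    /** Minimal escaping for element content. */
    static String escape(String s) {
        return s.replace("&", "&amp;").replace("<", "&lt;").replace(">", "&gt;");
    }

    public static void main(String[] args) {
        String verbose = "<idal:fields xmlns:idal=\"urn:example:idal\">"
                + "<idal:mField idal:name=\"DatePrinted\"><idal:newvalue/></idal:mField>"
                + "<idal:mField idal:name=\"Processed\"><idal:newvalue>true</idal:newvalue></idal:mField>"
                + "</idal:fields>";
        System.out.println(simplify(verbose));
    }
}
```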

Recently, after taking a few months off from the project, I've come to believe it is possible to dynamically generate, with relative ease, a SOAP WSDL that is fully expressive and accommodates changes to the Compiere/Adempiere data dictionary. Further, by using XSD extension mechanisms, we can limit the number of operations, or verbs, to a reasonable number. So, under this scenario, we'd have operations (verbs) such as "Create", "Delete", "Update" and "Search", which can be applied to common documents (nouns) such as PO, Invoice etc.

Another key consideration is security. Passing username/password values, in clear text, even over SSL, is not sufficiently secure for most organizations. After all, the SOAP XML is probably littered throughout log files, leaving credentials easily exposed in clear text to any would-be hacker. Instead, the preferred approach is to use WS-Security, which through profiles, can support a variety of encryption solutions. For example, a binary hash token can be used that is highly secure. Further, WS-Security is one of the few WS-* standards that is actually more-or-less universally supported. For instance, the UsernameToken WS-Security standard is nicely supported by both .NET and Java (Axis Rampart), and presumably others as well.
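
For a sense of what a hashed credential looks like, the UsernameToken profile defines a digest form of the password computed as Base64(SHA-1(nonce + created + password)). Here is a minimal sketch of that calculation using only JDK classes. It assumes the digest variant is the one being used, and it deliberately skips everything else a WS-Security stack such as Rampart handles for you (nonce generation, timestamps, replay checks, building the SOAP header).

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.Base64;

// Illustrative only: computes the UsernameToken password digest, nothing more.
class PasswordDigestSketch {

    /**
     * Base64( SHA-1( nonce + created + password ) ).
     * 'nonce' is the raw (decoded) nonce bytes; 'created' is the timestamp string sent in the token.
     */
    static String passwordDigest(byte[] nonce, String created, String password) {
        try {
            MessageDigest sha1 = MessageDigest.getInstance("SHA-1");
            sha1.update(nonce);
            sha1.update(created.getBytes(StandardCharsets.UTF_8));
            sha1.update(password.getBytes(StandardCharsets.UTF_8));
            return Base64.getEncoder().encodeToString(sha1.digest());
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("SHA-1 is not available", e);
        }
    }

    public static void main(String[] args) {
        // Fixed sample values; a real client would use a fresh random nonce per request
        byte[] nonce = "sample-nonce".getBytes(StandardCharsets.UTF_8);
        System.out.println(passwordDigest(nonce, "2007-04-12T10:15:30Z", "secret"));
    }
}
```

The password itself never appears in the message, so a SOAP request that ends up in a log file exposes only a digest tied to one nonce and timestamp.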

Notwithstanding my earlier contention that SOAP is the best way to go in an enterprise environment, it's foolish to think everyone concurs with me on that. So, it also makes sense to expose the services through other protocols as well, including JMS and REST (or even email). My previous approach of using Axis2 really limited the solution to SOAP. What I'm proposing this time around is to use ServiceMix as the integration broker, whereby it could receive the inbound messages in a variety of protocols. Thus, we're no longer limited to just SOAP.

ARCHITECTURE

From an architecture standpoint, what I had in mind was:

In the above scenario, ServiceMix "binding components" are used to manage the inbound requests. From there, each request is routed through a profile validation service (I'll explain more of this in a future posting), then ultimately to the implementation bean/service that will fulfill the request.

RELEASE PHASES

Obviously, this is a fairly ambitious undertaking. So, I propose the following phases:

Phase 1:

- Authorization/Authentication via Profile service

- Dynamic WSDL Generation

- Core API set (Create, Delete, Update, Insert)

- WS-I Basic Compatibility for SOAP.

- WS-Security Authentication using Mediator Service (Apache Synapse or Spring-WS, which has strong WS-Security support)

- Additional API Calls.

- Performance Improvements

I anticipate releasing the code under the same license as Adempiere, or even BSD, if appropriate. A Sourceforge project will be launched as soon as enough code exists to seed the project (nobody is interested in vaporware projects -- real code has to exist to excite the community). I have begun working on the code, and anticipate a May delivery timeframe for the first seed.


Welcome!

This blog will mostly focus on the new technologies I've been working on lately. Hopefully you'll find some of my postings useful, and I'll always appreciate feedback.

Thanks!

jeff davis