Background

While there are many very good Docker tutorials currently available, I found that many are either too simplistic in the scenarios offered, or in some cases, too complex for my liking. Thus, I decided to write my own tutorial that describes a multi-tier architecture configured using Docker.

Prerequisites

This tutorial assumes that you have successfully installed Git and Docker. I’m running Boot2Docker on a Mac, which enables Docker to be run nearly as though it were installed natively on the Mac (on the PC, Boot2Docker isn’t quite as straightforward). I assume some basic level of familiarity with Docker (a good source of information is https://docs.docker.com/articles/basics/).

Scenario

The scenario I developed for purposes of this tutorial is depicted below:

As you can see, it involves multiple tiers:

MongoDB: This is our persistence layer that will be populated with some demo data representing customers.

API Server: This tier represents RESTful API services. It exposes the Mongo data packaged as JSON (for purposes of this tutorial, it doesn’t include the business logic that would normally be present). This tier is developed in Java using Spring Web Services and Spring Data.

WebClient: This is a really simple tier that demonstrates a web application written in Google’s Polymer UI framework. All of the business logic resides in JavaScript, and CORS is used to access the API data from the services layer.

I’m not going to spend much time on the implementation of each tier, as it’s not really relevant for purposes of this Docker tutorial. I’ll touch on some of the code logic briefly just to set the context. Let’s begin by looking at how the persistence layer was configured.

MongoDB Configuration

Note: The project code for this tier is located at: https://github.com/dajevu/docker-mongo.

Docker Hub contains an official image for mongo that we’ll use as our starting point (https://registry.hub.docker.com/u/dockerfile/mongodb/). In case we need to build upon what is installed in this image, I decided to clone the image by simply copying its Dockerfile (images can be distributed either as prebuilt binaries or described declaratively in a text Dockerfile). Here’s the Dockerfile:

Line 5 identifies the base image used for the mongo installation - this being the official dockerfile/ubuntu image. Since no version was specified, we’re grabbing the latest.

Note: Comments in Dockerfiles start with #.

Line 8 begins the installation of the mongo database, along with some additional tools such as curl, git, etc. apt-get is Ubuntu’s standard package management tool.

Lines 16 and 18 set up some mount points for where the mongo database files will reside (along with a git mount point in case we need it later). On line 21, we set the working directory to be that data mount point location (from line 16).

And lastly, we identify some ports that will need to be exposed by Mongo (lines 27 & 28).

Assuming you have grabbed the code from my git repository (https://github.com/dajevu/docker-mongo), you can launch this Docker image by running the script runDockerMongo.sh (in Windows Boot2Docker, you’ll need to fetch the code within the VM that Docker is running in). That script simply contains the following:

docker run -t -d -p 27017:27017 --name mongodb jeffdavisco/mongodb mongod --rest --httpinterface --smallfiles

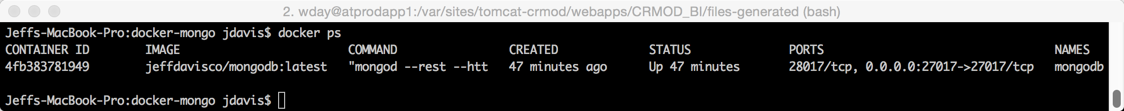

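When run, it launches a container from the jeffdavisco/mongodb image, pulling the image from the registry if it isn’t already available locally (the Dockerfile in the directory is only needed if you want to rebuild the image with docker build). You can confirm it’s running by using the docker ps command: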

As you can see from the above, in this case it launched Mongo with a container Id of 4fb383781949. Once you have the container Id, you can look at the container’s server logs using:

docker logs --tail="all" 4fb383781949 #container Id

If you don’t see any results from docker ps, that means that the container started but then exited immediately. Try issuing the command docker ps -a. This will identify the container Id of the failed service, and you can then use docker logs to identify what went wrong. You will need to remove that container with docker rm before launching a new one.
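The troubleshooting steps above can be strung together in a small helper. This is only a sketch: the function name inspect_and_remove and the DOCKER override variable are hypothetical and not part of the project scripts. Setting DOCKER=echo prints the commands instead of running them.

```shell
# Hypothetical helper: show why a container exited, then remove it so the
# name can be reused. DOCKER may be set to "echo" for a dry run.
DOCKER="${DOCKER:-docker}"

inspect_and_remove() {
    name="$1"
    # List the container even if it has already exited.
    $DOCKER ps -a --filter "name=$name"
    # Print the last 50 log lines to see what went wrong.
    $DOCKER logs --tail=50 "$name"
    # Remove the stopped container before launching a new one.
    $DOCKER rm "$name"
}
```

For the container in this tutorial, the call would be inspect_and_remove mongodb.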

Once the Mongo container is running, we can now populate it with some demo data that we’ll use for the API service layer. A directory called northwind-mongo-master is present in project files and it contains a script called mongo-import-remote.sh. Let’s look at the contents of this file:

This script simply runs through each .csv file present in the directory and uses the mongoimport utility to load each one into a collection. However, there is one little wrinkle. Since mongoimport is connecting to a remote mongo database, the IP address of the remote host is required, and this remote host is the mongo container that was just launched. How did I determine which IP address to use? If you are using Boot2Docker, you can simply run boot2docker ip, which gives you the IP address of the Docker host you are using. If you are running directly on a Docker host, you can use localhost as the address (since that port was exposed to the Docker host when we launched the container).
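As a rough illustration of the loop and the host lookup described above (not a copy of mongo-import-remote.sh), something like the following could be used. The function names, the Northwind database name and the MONGOIMPORT override are assumptions made for this sketch. The mongoimport options shown (--host, --db, --collection, --type, --file, --headerline) are standard ones.

```shell
# Sketch only: the function names, the "Northwind" database name and the
# MONGOIMPORT override are assumptions, not taken from the project script.
MONGOIMPORT="${MONGOIMPORT:-mongoimport}"

# Use the Boot2Docker VM address when boot2docker is installed,
# otherwise assume Docker runs on this machine.
docker_host_ip() {
    if command -v boot2docker >/dev/null 2>&1; then
        boot2docker ip
    else
        echo localhost
    fi
}

# Import every .csv file in a directory into a collection named after the file.
import_csv_dir() {
    host="$1"
    dir="$2"
    for f in "$dir"/*.csv; do
        [ -e "$f" ] || continue    # the directory had no .csv files
        collection="$(basename "$f" .csv)"
        $MONGOIMPORT --host "$host" --db Northwind --collection "$collection" \
            --type csv --file "$f" --headerline
    done
}
```

A typical call would be import_csv_dir "$(docker_host_ip)" northwind-mongo-master. Setting MONGOIMPORT=echo first prints the commands without touching the database.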

In order to load the test data, update that script so that it has the proper IP address for your environment. Then you can run the script (mongo-import-remote.sh), and it should populate the mongo database with the sample data. If you are using MongoHub, you can connect to the remote mongo host, and see the following collections available:

Fortunately, we don’t have to go through such hurdles on the remaining containers.

API Service Tier Configuration

The API service is a very simple RESTful web service that exposes some of the mongo data in JSON format (granted, you can access mongo directly through its RESTful API, but in most real-world scenarios, you’d never expose that publicly). The web application, written in Java Spring, uses the Spring Data library to access the remote mongo database.

The project files for this tier are located at: https://github.com/dajevu/docker-maven-tomcat. While I won’t cover all of the Spring configuration/setup (after all, this isn’t a Spring demo), let’s briefly look at the main service class that will have an exposed web service:

Using the Spring annotations for Spring web services, we annotate this controller in line 5 so that Spring can expose the subsequent services using the base URL /customer. Then, we define one method that is used to expose all of the customer data from the Mongo collection. The CustomerDAO class contains the logic for interfacing with Mongo.

Let’s look at the Dockerfile used for running this web service (using Jetty as the web server):

As you can see, in line 2 we’re identifying the base image for the API services as a Java 7 image. Similar to the mongo Dockerfile, the next thing we do in lines 8-15 is install the various required dependencies such as git, maven and the mongo client.

Then, unlike with our mongo Dockerfile, we use git to install our Java web application. This is done in lines 21 through 26. We create a location for our source files, then install them using git clone. Lastly, in line 28, we run the shell script called run.sh. Let’s take a look at that script’s contents:

#!/bin/bash

echo `env`

mvn jetty:run

As you can see, it’s pretty minimal. We first echo the environment variables from this container so that, for troubleshooting purposes, we can see them when checking the docker logs. Then, we simply launch the web application using mvn jetty:run. Since the application’s source code was already downloaded via git clone, maven will automatically compile the webapp and then launch the jetty web server.

Now, you may be wondering, how does the web service know how to connect to the Mongo database? While we exposed the Mongo database port to the docker host, how is the connection defined within the Java app so that it points to the correct location? This is done by using the environment variables automatically created when you specify a dependency/link between two containers. To get an understanding of how this is accomplished, let’s look at the startup script used to launch the container, runDockerMaven.sh:

docker run -d --name tomcat-maven -p 8080:8080 \
--link mongodb:mongodb jeffdavisco/tomcat-maven:latest \
/local/git/docker-maven-tomcat/run.sh

As you can see, the --link option is used to notify docker that this container has a dependency on another container, in this case, our Mongo instance. When present, the --link option will create a set of environment variables that get populated when the container is started. Let’s examine what those environment variables look like by using the docker logs command (remember, before you can launch this container, the mongo container must first be running):

MONGODB_PORT_28017_TCP_PROTO=tcp

HOSTNAME=f81d981f5e9e

MONGODB_NAME=/tomcat-maven/mongodb

MONGODB_PORT_27017_TCP=tcp://172.17.0.2:27017

MONGODB_PORT_28017_TCP_PORT=28017

MONGODB_PORT=tcp://172.17.0.2:27017

MONGODB_PORT_27017_TCP_PORT=27017

PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

PWD=/local/git/docker-maven-tomcat

MONGODB_PORT_27017_TCP_PROTO=tcp

JAVA_HOME=/usr/lib/jvm/java-7-oracle

MONGODB_PORT_28017_TCP_ADDR=172.17.0.2

MONGODB_PORT_28017_TCP=tcp://172.17.0.2:28017

MONGODB_PORT_27017_TCP_ADDR=172.17.0.2

The environment variables starting with MONGODB represent those created through the link. How did it know the prefix was MONGODB? The prefix comes from the alias given in the --link option (the part after the colon in mongodb:mongodb), which here matches the optional --name we gave the mongo container when we launched it.
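To make the link variables more concrete, here is a minimal shell sketch that splits a value such as MONGODB_PORT into host and port. The helper names link_host and link_port are hypothetical, and the default value is just the sample from the log output above. Inside a linked container, Docker sets the variable itself.

```shell
# Sample value copied from the log output above; inside a linked container
# Docker sets MONGODB_PORT itself, so the default is only for trying this out.
MONGODB_PORT="${MONGODB_PORT:-tcp://172.17.0.2:27017}"

# Split a tcp://host:port value using POSIX parameter expansion.
link_host() {
    hostport="${1#tcp://}"            # drop the scheme
    printf '%s\n' "${hostport%:*}"    # keep everything before the last colon
}

link_port() {
    printf '%s\n' "${1##*:}"          # keep everything after the last colon
}

echo "mongo host: $(link_host "$MONGODB_PORT")"
echo "mongo port: $(link_port "$MONGODB_PORT")"
```

With the sample value, this reports host 172.17.0.2 and port 27017, the same values carried by MONGODB_PORT_27017_TCP_ADDR and MONGODB_PORT_27017_TCP_PORT.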

Now, how is that incorporated into the API service? When we define the Spring Data properties for accessing mongo, we specify that an environment variable is used to identify the host. This is done in the spring-data.xml file (located under src/main/resources in the project code).

threads-allowed-to-block-for-connection-multiplier="4"

connect-timeout="1000"

max-wait-time="1500"

auto-connect-retry="true"

socket-keep-alive="true"

socket-timeout="1500"

slave-ok="true"

write-number="1"

write-timeout="0"

write-fsync="true" />

So, when we start up the container, all that is required is to launch it using runDockerMaven.sh. Let’s confirm that the service is working by accessing the service via the browser using:

http://192.168.59.103:8080/service/customer/
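The same check can be made from a terminal with curl. The sketch below assumes the port and path shown in the URL above. The helper names service_url and fetch_customers and the CURL override are hypothetical.

```shell
# Sketch: CURL can be set to "echo" for a dry run; service_url and
# fetch_customers are hypothetical helper names.
CURL="${CURL:-curl}"

# Build the customer service URL for a given docker host address.
service_url() {
    printf 'http://%s:8080/service/customer/\n' "$1"
}

fetch_customers() {
    # --silent hides the progress meter, --fail makes curl exit non-zero
    # on HTTP errors.
    $CURL --silent --fail "$(service_url "$1")"
}
```

For example, fetch_customers 192.168.59.103 requests the customer list from the Boot2Docker address used in this tutorial.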

In your environment, if you are using Boot2Docker, run boot2docker ip to identify the IP address on which the service is exposed. If running directly on a docker host, you should be able to specify localhost. This should bring back some JSON customer data such as:

Now, let’s look at the last of the containers, the one used for exposing the data via a web page.

Web Application Tier

The web application tier consists of a lightweight Python web server that just serves up a static HTML page along with the corresponding JavaScript. The page uses Google’s Polymer UI framework for displaying the customer results in the browser. In order to permit Polymer to communicate directly with the API service, CORS was configured on the API server to permit the inbound requests (I wanted to keep the web app tier as lightweight as possible).

Note: The source code/project files for this tier can be found at: https://github.com/dajevu/docker-python

Here is the relevant piece of code from the Javascript for making the remote call:

Obviously, the VMHOST value present in the url property isn’t a valid domain name. Instead, when the Docker container is launched, it will replace that value with the actual IP of the API server. Before we get into the details of this, let’s first examine the Dockerfile used for the Python server:

This Dockerfile is quite similar to the others we’ve been using. Like the mongo container, this one is based on the official ubuntu image. We then specify in lines 11-14 that Python and related libraries are loaded. In lines 23-24, we prepare a directory for the git source code. We follow that up by fetching the code using git clone in line 27, and then set our working directory to that location (line 28). The CMD command in line 31 is actually ignored because the launch script specifies which command to run, which overrides what is in the Dockerfile.

Let’s look at the startup script now used to launch our container:

docker run -d -e VMHOST=192.168.59.103 --name python -p 8000:8000 \
--link tomcat-maven:tomcat jeffdavisco/polymer-python:latest \
/local/git/docker-python/run.sh

A couple of things to note in the above. Notice how I’m passing the IP address of my Boot2Docker instance using the environment flag -e VMHOST=192.168.59.103. Similar to what we needed to do when configuring the API service tier, you will have to modify this for your environment (if using Boot2Docker, run boot2docker ip to find the IP address of your docker host, or if running natively on Linux, you can use localhost). The other thing we are doing is exposing port 8000, which is the port our Python web server listens on. Lastly, we are instructing the docker container to run the run.sh shell script. We’ll look at this next.

The run.sh script contains the following:

#!/bin/bash

# replace placeholder with actual docker host

sed -i "s/VMHOST/$VMHOST/g" post-service/post-service.html

python -m SimpleHTTPServer 8000
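To see what the sed line in this script does in isolation, here is a small stand-alone sketch that runs the same substitution on a throwaway file. The helper name replace_vmhost and the sample line are hypothetical. Unlike run.sh, it writes to a new file rather than editing in place, since the -i flag behaves differently in GNU sed and the BSD sed shipped with macOS.

```shell
# Sketch only: replace_vmhost and the sample line are hypothetical.
replace_vmhost() {
    # $1 = replacement value, $2 = input file, $3 = output file
    sed "s/VMHOST/$1/g" "$2" > "$3"
}

# Try it on a temporary file containing the placeholder token.
demo_dir="$(mktemp -d)"
printf 'url="http://VMHOST:8080/service/customer/"\n' > "$demo_dir/in.html"
replace_vmhost 192.168.59.103 "$demo_dir/in.html" "$demo_dir/out.html"
cat "$demo_dir/out.html"
rm -rf "$demo_dir"
```

An IP address or hostname is safe as the replacement value. A value containing / or & would need escaping before being placed in the sed expression.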

The sed command replaces the VMHOST placeholder token with the value of the environment variable passed to the docker container when it was launched ($VMHOST). Once the html file has been updated, we launch the Python server using the command python -m SimpleHTTPServer 8000. After running the startup script, if everything went well, you should be able to visit http://dockeriphost:8000 in your browser (where dockeriphost is your boot2docker ip address, or the local docker host if on Linux). You should see something like:

You've now completed the tutorial! You have one docker container running mongo, another running an API service, and a third running a simple web server.

I hope you've enjoyed this tutorial, and I hope to have a follow-up to it shortly describing how these containers can be more easily managed using fig (learn more at: http://www.fig.sh/).
